Transforming an Expert into an Expert System

Talking about how “software”, “computer systems” and “experts” come together and cutting through some of the AI hype.

Wikipedia tells us “In artificial intelligence, an expert system is a computer system emulating the decision-making ability of a human expert.” I’m not sure why the “in artificial intelligence” is in that statement. Pick your favorite definition of artificial intelligence (AI). It’s a bit of a moving target, but many want to claim that they produce or use it. Most definitions point to the idea of a computer doing something that you would normally expect to require human intelligence.

I’ve been helping people put expertise into software for the better part of 40 years. I took a course in “Expert Systems” in college and had a short love affair with the LISP programming language, long the favored language for AI research. I started my full-time professional career in the mid-80s at the beginning of the “AI craze” and joined a company named “Knowledge Systems” in 1988… just before the “AI Winter,” when funding for AI research dried up because the promised results were never delivered.

But for the last decade or so, I’ve heard more and more people claiming or believing that AI is going to take over the world, and that if you don’t get on the bandwagon, you’ll be replaced, whether you are a business owner, a programmer, or a “data scientist.” Microsoft, IBM, and other large companies tout how their AI is changing the world.

No doubt, there have been many advances in technologies that would certainly be considered under the AI umbrella. Many of us use things almost every day that have benefited from some of those advances. As I type this, I benefit from technology that anticipates what words I might want to type next, and I hope cool stuff like that keeps coming.

But the reality is that the vast majority of software that helps us improve what we do in the world, today and in the near future, needs expertise that is neither inherent in a computer nor derived by one. My company builds software systems that are game changers for many businesses and their customers. They are, generally speaking, expert systems in that they capture expertise from human experts and make it available through a computer system to those with less expertise. We rarely find any technology that would universally be called “AI” that is capable of contributing significantly to them, though we often wish it could.

One thing that is essential to most “AI” and the building of any software is a feedback loop and the ability to know what to do with that feedback.

I thought it might be worth taking a shot at talking about how “software”, “computer systems” and “experts” come together, and at cutting through some of the AI hype.

Computers Are Not Intelligent, They Are Just Fast

Although there are those who think there may come a time when machines think, they certainly don’t do it today. They do what someone programs them to do through embedded hardware or software logic, and they do it really fast… and they have gotten faster and faster. Given structured data and an algorithm, they can sort through data very quickly. With a different algorithm, they can turn unstructured data into structured data very quickly. Given enough data in a particular context, software systems can use statistics to make predictions that are right with high probability, much faster than a human could gather and process the data to reach the same result.

But the software system doesn’t know whether it predicted correctly. Someone who “knows” has to validate the system’s answers or decide that it is trustworthy enough to be useful. In a narrow enough problem, where it is uncertain what the “best answer” even is, the software may come up with a better answer than any human could, or it may not… How can we know? Do you think there is some expert at Google who can validate that the top hit for each particular search is actually the “best answer”?

Computers Are Not Experts, They Are Just Consistent

In 1980, the Dreyfus brothers created a model for skill acquisition. In it, novices follow context-free rules, while experts act on an intuitive grasp of the situation built from experience. Although the model has its critics, it rings true for most people who’ve been around awhile and have observed the difference between novices in a skill set, the most advanced experts, and the people in between. The Dreyfus brothers were also early critics of AI, and the debate has gone back and forth ever since.

Aim a software system at a set of data using a set of assumptions and it will consistently come up with the same answers. It can even be set up to question its assumptions given new observations and reassess its answers. Sounds like an expert to some extent.

There are times when this works great. It works great for Google because they can follow sets of rules to get people to popular places on the internet and make a lot of money on ads. But who hasn’t searched for something on the internet via Google (or any search engine) and found the results wanting? Why? Because there is no intelligent dialog going on between an expert and someone seeking that expertise to get an answer.

Computers Don’t Learn, They Process Data

Well, OK, in one sense they CAN learn. But they have to be told to collect data. Someone has to produce the data in a form they can access. And they have to be told what to do with that data.

Even if computers are set up to “learn” from that data, it is probably for a specific goal. For example, Google scans the internet for lots of words (and images, and other data) with the assumption that people are going to search for the information that those words “mean”. Google has lots of data and has done a lot of processing.

Based on Google’s success, would anyone like a software system (or series of systems) to take that data and create an innovative vehicle to get people from a point in New York City to Sydney, Australia without a human involved? I’m not talking about a map or flight plan; I am talking about a vehicle that will safely get you there. If you think so, will you be the first one to volunteer to sit in its seat?

Valuable information culled from that data might help humans create such a vehicle, and there are robots that can help build it. But we are far from being able to have a computer, or a series of computers, accomplish this goal without humans.

We are certainly not against “machine learning,” but the reality is that computers need to be trained, too. And the cost of training can be VERY high, often requiring hundreds of thousands of training samples, many humans to help validate the patterns found (which also brings human error into play), and further quality control measures to accomplish even the smallest task.

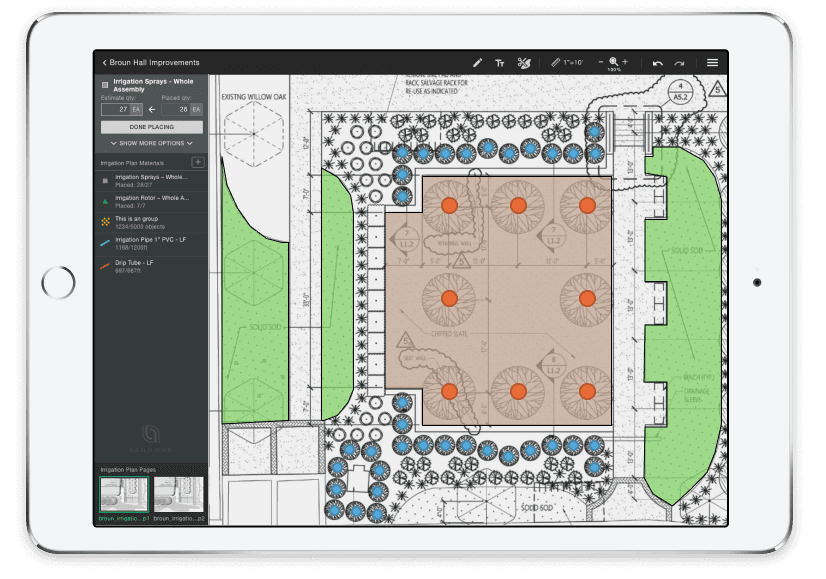

“Pattern recognition” is something computers can theoretically do very fast. But they aren’t as good as humans at filtering out noise. For example, we humans can look at a landscape architect’s plans, recognize the pattern the architect used for a particular shrub, and count them up. If there are 197 of those same shrubs, we are prone to miss a couple or get distracted when counting. A computer would not lose count, but the subtle variations in this kind of unstructured data require massive amounts of training data before a machine learning (ML) model can begin to separate the pattern from the noise.

We recently gave a series of such drawings to a machine learning program from a top tech company, pointed it to some of the patterns that we told it were shrubs, and it couldn’t count any of them on the drawings. We got better results as we supplied different “control” files, which showed that the learning program’s assumptions may be non-intuitive and tightly constrained. Other lines on the drawing threw it off, in addition to an obviously less-than-perfect algorithm. We have no doubt that, given enough data, the correct set of assumptions, control data, and more, we could eventually have had much more success. Then we’d have to do it again for each of the other types of shrubs, trees, flowers, etc. And when should a slight variation of a pattern point to the same type of shrub or tree, and when should it identify a new one? Without a controlled set of patterns, and without making sure that every architect who might supply our drawings complies with those patterns, the system we “trained” could have very limited value.
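
To make the noise problem concrete, here is a deliberately naive sketch: exact template matching on a drawing represented as a hypothetical grid of characters. It is not machine learning and not how the program we tried works; the `SHRUB` symbol, the `count_exact` helper, and the toy drawings are all made up for illustration. It only shows how one stray line can make a rigid matcher miss a shrub that any human would still count.

```python
# Hypothetical shrub symbol: a 3x3 ring of "o" characters with an empty center.
SHRUB = [
    "ooo",
    "o.o",
    "ooo",
]


def count_exact(drawing: list[str], symbol: list[str]) -> int:
    """Count every position where `symbol` appears exactly, with zero tolerance for noise."""
    h, w = len(symbol), len(symbol[0])
    count = 0
    for r in range(len(drawing) - h + 1):
        for c in range(len(drawing[0]) - w + 1):
            if all(drawing[r + i][c:c + w] == symbol[i] for i in range(h)):
                count += 1
    return count


# A toy "drawing" with three shrubs on it.
clean = [
    "ooo....ooo",
    "o.o....o.o",
    "ooo....ooo",
    "..........",
    "...ooo....",
    "...o.o....",
    "...ooo....",
]

# The same drawing with a property line ("-") running along row 5,
# which passes through the center of the bottom shrub.
noisy = clean.copy()
noisy[5] = "---o-o----"

print(count_exact(clean, SHRUB))  # 3
print(count_exact(noisy, SHRUB))  # 2
```

A human still sees three shrubs in `noisy`. Making a matcher tolerate that kind of noise, without also counting things that merely resemble a shrub, is where the large training sets and control files come in.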

Where Probability Matters, Computers Might Help

Because computers are fast and can process lots of data, they can quickly help deduce some things with a useful level of confidence. When you type “expert systems” into Google, you will get answers that are “probably” relevant. Google has looked for words and patterns of words in a vast amount of content all over the internet, then looked at what people click on when they enter the same words, along with a lot of other data. But type in something more specific, like “parody of bohemian rhapsody related to the Kansas City Chiefs 1993 Joe Montana” and you won’t get anything helpful at all. (I’ve been looking for years for this parody, which I heard in 1993 on a radio station in Kansas City.) Often, the more specific and obscure the search, the less chance you have of finding a satisfactory result.

Where Accuracy Matters, Computers Might Help

Computers are consistent, or they don’t work at all. They do math pretty well. (Note: floating-point rounding errors have existed in computers for as long as computers have done floating-point math, and they seem to show up more often in JavaScript, where the standard Number type is always a double-precision float.) So, assuming the computer program has reliable rules for figuring something out and is given accurate inputs, you will get accurate output. But if the system is missing relevant rules, you will still get output; it just may not be accurate.
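
The rounding issue in that note is easy to reproduce. The sketch below uses Python, whose floats use the same IEEE 754 double-precision format as JavaScript’s Number type, to show that the error comes from the number format rather than from any one language, along with two common ways to work around it.

```python
import math
from decimal import Decimal

# Python floats are IEEE 754 double-precision values, the same format
# JavaScript uses for its Number type. 0.1 and 0.2 have no exact binary form.
total = 0.1 + 0.2
print(total)          # 0.30000000000000004
print(total == 0.3)   # False

# Workaround 1: compare with a tolerance instead of exact equality.
print(math.isclose(total, 0.3))  # True

# Workaround 2: use decimal arithmetic when exact base-10 results matter (e.g. money).
exact = Decimal("0.1") + Decimal("0.2")
print(exact == Decimal("0.3"))   # True
```

Which workaround fits depends on the rules of the domain: a tolerance is fine for measurements, while currency usually calls for exact decimal arithmetic.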

An answer produced by a computer isn’t inherently more accurate than an answer produced by a human… especially an expert human.

Where Expertise Matters, Find an Expert

Today, I talked to an expert in alternative energy, and an expert in designing cooling towers. They both have used many computer systems and have found them wanting. They are paid a lot of money for their particular expertise. The latter uses industry-leading computer-aided design (CAD) software, and they were speaking to me because the software system that they are using doesn’t understand that they are designing a cooling tower. Is the software helpful to the designer? Absolutely. But it can’t even begin the process of designing a cooling tower or find flawed assumptions that could be disastrous without the expert human to guide it.

The expert can design the cooling tower without the software, just not as fast. Without an expert user, the CAD program wouldn’t know where to start if you told it that you needed a cooling tower and gave it the sketchy input the designer gets every time he’s asked to design one. The software doesn’t know what questions to ask to get the missing or inaccurate information, how to check the validity of the information, where to begin to create the skeleton of the design, what special implications that design will have on its surroundings, etc.

… and Leverage Their Expertise

Expertise is hard earned. One way to leverage an expert’s knowledge is to hire them, either as an employee or as a consultant. But reproducing the knowledge an expert possesses and expertly wields is difficult. Many experts turned business owners understand this at a very deep level.

It took me 8 years of very intentional and concentrated time to train a very talented individual to replace me as CEO of a custom software development company. Imparting my expertise to him took a lot of time, intentional input, feedback loops, and situated learning, among many other factors. Along the way, there were things we discussed that I slowly turned over to him.

But there are other ways to leverage expertise. For example, one can find the tasks the expert has come to understand well and use software so that the expert no longer has to perform them. Many experts learn to do this themselves as reflective practitioners, using continuous improvement to automate the tasks that, to them, have become mundane.

Some things I had learned I put into algorithms in spreadsheets. They started simple, became more complex, and were then simplified after a lot of discussion and testing. Some of them were eventually put into software tools. We now have more tools to monitor, measure, and summarize many of the things I had learned were important to maintaining a healthy business and culture, and we continued to learn and refine them. We now have Key Performance Indicators (KPIs) that are monitored weekly and reflect the health of a company that has grown significantly. Instead of the hours I used to spend each week entering data into a spreadsheet and trying to spot problems intuitively, we now have more accurate health signals at a glance with very little human intervention.
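
The specific KPIs aren’t named here, so the KPI names, healthy ranges, and weekly values below are hypothetical. This sketch only shows the general shape of turning an expert’s spreadsheet judgment into an automated signal: the range for each indicator, which is the part that took discussion and testing to settle on, lives in data, and a small function (`weekly_health`, also made up for illustration) labels each week’s readings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    """A hypothetical KPI with the healthy range an expert settled on."""
    name: str
    low: float   # below this, the signal is a warning
    high: float  # above this, the signal is a warning


def weekly_health(kpis: list[Kpi], readings: dict[str, float]) -> dict[str, str]:
    """Label each KPI 'ok', 'low', 'high', or 'missing' for this week's readings."""
    status = {}
    for kpi in kpis:
        value = readings.get(kpi.name)
        if value is None:
            status[kpi.name] = "missing"
        elif value < kpi.low:
            status[kpi.name] = "low"
        elif value > kpi.high:
            status[kpi.name] = "high"
        else:
            status[kpi.name] = "ok"
    return status


# Hypothetical KPIs and thresholds, for illustration only.
KPIS = [
    Kpi("billable_utilization", low=0.70, high=0.90),
    Kpi("weeks_of_backlog", low=4, high=26),
    Kpi("voluntary_turnover_rate", low=0.0, high=0.10),
]

this_week = {"billable_utilization": 0.64, "weeks_of_backlog": 12}
print(weekly_health(KPIS, this_week))
# {'billable_utilization': 'low', 'weeks_of_backlog': 'ok', 'voluntary_turnover_rate': 'missing'}
```

Treating a missing reading as its own status, rather than silently skipping it, keeps a gap in the data from looking like a healthy week.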

Extracting and Automating Expertise is an Expert Skill and Computers Don’t Have It

I’ve been involved in the creation of software for the better part of 40 years. When I went to college to learn about it, I was usually provided with specifications that gave me just about all the information I needed to build the software. Every once in a while, I was given creative freedom to make up some information. For example, I was supposed to make an airline reservation system and could guess at what information I needed… no airline required.

When I started my professional career, I was sometimes given detailed specifications and sometimes given some ideas to explore. It wasn’t until I got into the “consulting” world 33 years ago that I started to learn how to extract information from people. Most of the people I worked with had some idea about how software could make their jobs more efficient and effective or provide a service that would improve the lives of others. Most of them didn’t have clear “specifications”. They may not even have had clear “goals”.

The Agile Manifesto was written by some expert software developers. The signers had many years of experience building software for others. There have certainly been excellent practices and process improvements that have followed this inspirational document. But many have raised the “agile” banner and ended up with subpar or even dysfunctional results. I believe this is because the “preamble” of the Agile Manifesto is often overlooked.

“We are uncovering better ways of developing software by doing it and helping others do it…”

There is expertise necessary to help an expert (or rising expert) turn their goals into working software that encapsulates expertise. It doesn’t just happen by hiring “rockstar coders”. It doesn’t just happen by following a set of rules or practices. It doesn’t happen magically through machine learning.

We have built many systems that encapsulate expertise for our clients, and we are not the only ones who have done so. We leverage technologies like “rules engines”, “APIs to other systems that automate some expertise”, and occasionally some machine learning, among a growing set of accessible technologies. But most people who need software to improve what they do need an expert’s knowledge to be expertly extracted and turned into a system that leverages computers to do things more efficiently or to make that expertise available to others.
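
A common core idea in rules engines is forward chaining: keep applying if-then rules to the known facts until nothing new can be concluded. Below is a minimal sketch of that idea, not any particular product’s API; the `Rule` type, the `forward_chain` function, and the textbook-style animal rules are hypothetical, chosen only because they show one conclusion feeding another rule.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    """If every fact in `conditions` is known, conclude `conclusion`."""
    conditions: frozenset[str]
    conclusion: str


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Apply rules repeatedly until no rule adds a new fact (a fixed point)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


# Hypothetical textbook-style rules, for illustration only.
RULES = [
    Rule(frozenset({"has_feathers"}), "is_bird"),
    Rule(frozenset({"is_bird", "cannot_fly", "swims"}), "is_penguin"),
    Rule(frozenset({"has_fur"}), "is_mammal"),
]

observed = {"has_feathers", "cannot_fly", "swims"}
print(sorted(forward_chain(observed, RULES) - observed))  # ['is_bird', 'is_penguin']
```

The engine itself is a few lines; the value lies in the rules. Deciding which rules are right, and what to ask when a fact is missing, is the expertise that has to be extracted from a human expert.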

Right now, “AI” has seen a resurgence as a buzzword, and many tech companies claim that their AI technology is changing the world. In the mid-to-late 80s, it was also a hot buzzword. My previous employer, founded in the late 80s, even named itself Knowledge Systems Corporation. One of the things we did in the 80s was to examine various “Expert System Tools” and apply them to various types of systems. We found that only a small portion of those systems really benefited from the “AI” technology; the rest just needed a good programming language and good programmers to analyze, design, and build the system.

The same is true today. Yes, the technology has moved beyond some of the naive approaches to producing “artificial intelligence” in the 80s. The horsepower of our computers and our access to information have grown by leaps and bounds. But one thing remains the same: the vast majority of software that is needed requires humans (very skilled humans who have gained hard-earned expertise) both to extract expert knowledge and to analyze, design, and build a system that is an expert at something others would like to leverage.

You can’t wish that kind of software into existence. Humans aren’t just a bunch of data that you can throw processors at to reproduce. The humans are still the experts. We are in the business of amplifying hard-earned expertise. Computers and the technology developed on top of them are just a set of tools we can use to help us do that.

We work in a tight feedback loop with an expert to extract their knowledge a bit at a time and produce a “walking skeleton” of the system they imagine. We continue to extract, reflect, and refine with the expert. As soon as possible, we put it into the hands of those who can benefit from the captured expertise to bring them into the feedback loop.

And that’s how we produce an expert system.